Psychological effects and cognitive illusions in AI-assisted programming

There's something odd and new about the subjective experience of AI-assisted programming. It distorts our sense of elapsed time, difficulty, effectiveness, and joy.

Some of these distortions are well-known psychological effects and illusions, and the theories behind them can help us understand a bit better what's going on.

It's hard to judge how much work the AI did for us

When the AI takes an hour to do something, with all the attendant dead ends and back-and-forth, it's hard to know how effective it has been.

In general, it's very difficult to measure programming productivity objectively, and we are bad at estimating how long the same task would have taken us to do ourselves.

When we watch the AI try, fail, and iterate, we notice every failure and false start along the way. "Gah, you've broken the unit tests again, you foolish robot!" But when we code manually, we might make the same mistakes yet experience them differently. Maybe this is a case of the fundamental attribution error: we see our own actions as explained by the situation, whereas we're more likely to attribute permanent 'dispositions' to others based on their actions. Or, a little darker, maybe we adopt victim and perpetrator narratives: a 'perpetrator' narrative for our own mistakes (meaningful and comprehensible, a closed, isolated incident with no lasting implications) and a 'victim' narrative for the AI's mistakes (arbitrary and incomprehensible, part of a long-term story of continuing harm and lasting grievances).

We aren't always good judges of how hard things are or of what works for us and what doesn't, and we're unreliable at comparing ourselves to others. (For example, there's a lovely psychology experiment where students feel they've learned less from active recall than from passive review, even though active recall is actually more effective.) And things always seem simpler once we have understood them, so once we see the AI's solution, it seems obvious.

So for all these reasons, expect to see a lot of people rationalising away even a 10x speedup (1000+ lines of code in a day, rather than fewer than 100). After all, as Upton Sinclair put it, "it is difficult to get a man to understand something, when his salary depends on his not understanding it."

Context changes and the feeling of duration

In an hour of AI-assisted programming, especially with multiple AI agents working in parallel, we might experience many distinct events, e.g. discussing ideas, exploring multiple approaches, hitting a dead end, reverting and trying again, and shipping a couple of small features. Each of these feels like a significant, separate event.

Our sense of time is heavily influenced by the number of distinct events or changes we experience. As a result, an hour spent like this feels longer than an hour spent on a single, undifferentiated problem, even though you may have accomplished more than you would have in a day of traditional coding.

Being knocked out of flow

AI-assisted programming knocks us out of the "flow state": that magical zone of manageable-but-still-challenging work where you lose track of time.

Classic programming provides an almost instant feedback loop: write code, compile, see results, tweak, repeat. Each micro-adjustment feeds into that cycle.

AI-assisted development breaks this pattern. Even a 10-second delay between your instruction and the AI's response can bump you out of flow. Watching the AI iterate feels like watching paint dry, even if the paint is actually drying in just a few moments.

Eventually, the models will just output so fast that this problem goes away. In the meantime, it helps to run multiple agents in parallel and switch to another one whenever the AI you're watching stalls (see "Optimise for correctness"). Of course, this creates switching costs of its own that need to be managed...

Weakened reinforcement

'Variable reinforcement' is when you get a reward or positive result only some of the time, unpredictably. Think slot machines... or video games, or capricious bosses/partners, or email. This unpredictable signal creates very robust addictive behaviours.

Programming has something of this character. Maybe this time when I hit 'compile', it'll work!

But it's less reinforcing to watch someone else get the reward, so when the AI's the one pulling the lever and sometimes getting the cocaine, we miss out on the rush.

It's a different kind of work

While AI reduces the mechanical effort of coding, it increases the cognitive demand of communication. You're constantly making implicit knowledge explicit:

- Explaining background context
- Defining success criteria
- Articulating architectural principles
- Catching unstated assumptions

It's like the difference between driving a car (flow state, muscle memory) and teaching someone to drive (constant metacognition, explicit instruction).

When AI is writing most of the code, you spend most of your time reading what it wrote (often spread across various parts of the codebase) and trying to figure out how a series of scattered edits fits into the larger context. And reading code is much more effortful than writing it. I'm not 100% sure why. But this partly explains why it's so much more fun to build something greenfield than to modify an existing system, and it contributes to the illusion that it would be better to start from scratch and rewrite the whole damn thing!

Anybody know of any studies that would explain this?

A new kind of skill

AI-assisted programming is a skill. It might even be a meta-skill, a skill about skills, like teaching or learning to learn. It is multiplied by your own mastery of the task, your own clarity of thinking, and your own metacognition.

We can expect various non-monotonicities, e.g.

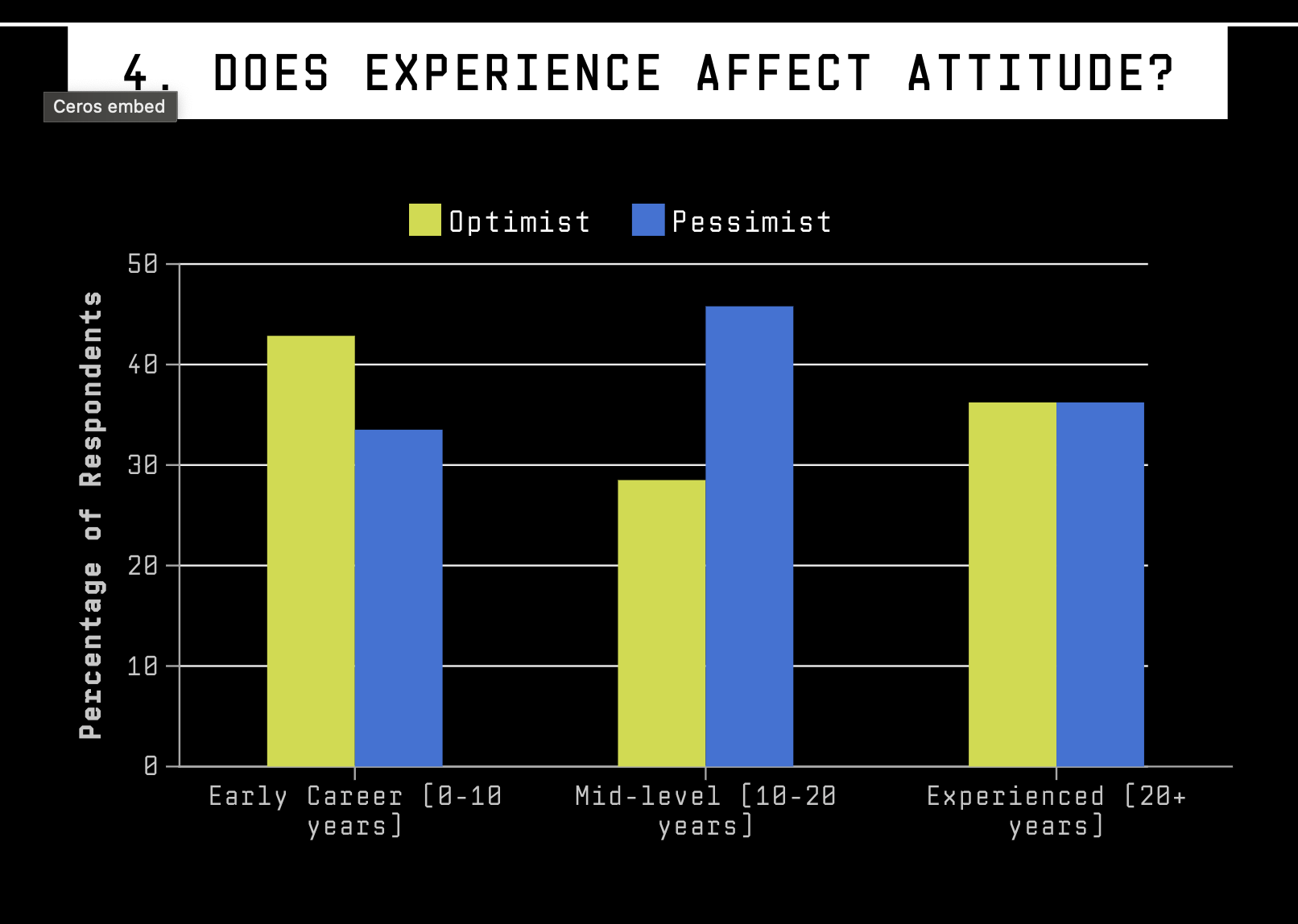

- Seniority: Junior programmers seem most positive about AI, because it levels the playing field for them. Senior programmers seem pretty positive, because their experience and judgment enable them to make effective architecture decisions without having to worry about semicolons. Mid-level programmers seem most negative, because AI threatens the craft and low-level skill on which their pride and economic value rest (see "Does experience affect attitude?").

- Expertise: I'd guess this partly explains the apparent drop in productivity when using AI tools in a recent (mid-2025) study. Expert AI-assisted programming will involve unlearning a lot of existing behaviours and skills, and replacing them with new ones.

- Verbal: We may find that the best AI-assisted programmers have different skillsets from the best human-only programmers, and that teachers, managers, and other expert communicators have a huge advantage.

Where does this leave us?

Despite all this, I have started to experience a new kind of flow when working with AI too. When the context is well-defined and the guardrails are protective, the speed at which dreams transform into working code can feel like magic. The impedance between imagination and implementation drops dramatically.

As AI models get faster and we develop better workflows, we might find a sweet spot that combines this new superpower with the satisfying flow of traditional programming. Until then, we're learning to appreciate a different kind of satisfaction: not the micro-dopamine hits of instant feedback, but the macro-achievements of watching our ideas materialise at unprecedented speed.

Discussion on LinkedIn

See the LinkedIn discussion and responses.